You have the perfect engineering dashboard, now what?

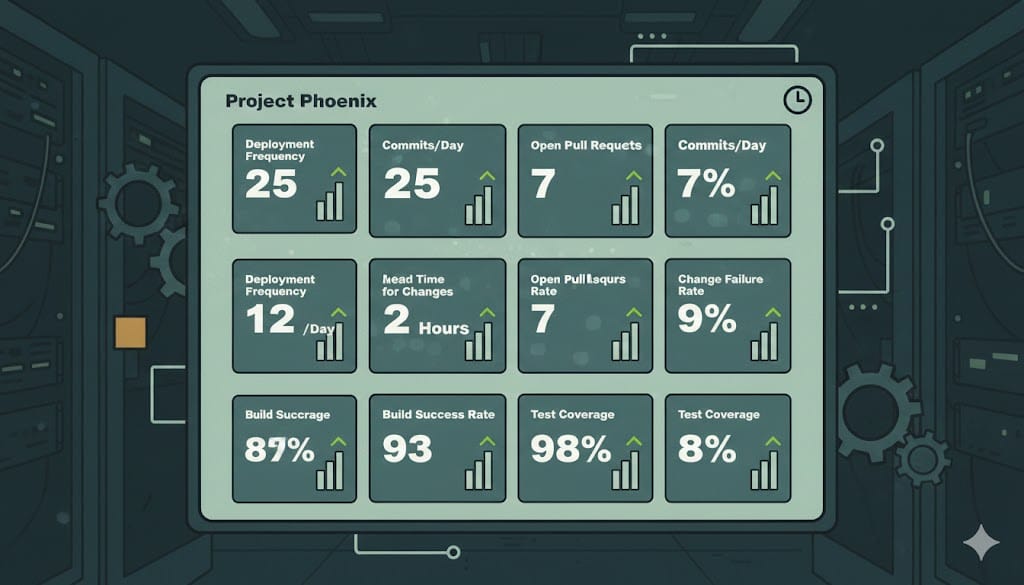

I ask this question in almost every conversation with an engineering leader who's just gotten serious about visibility into their org: "Imagine you have the perfect engineering dashboard... every metric you could ever want, clean data, real-time. Now what?"

The room usually goes quiet.

It's not that they don't have thoughts. It's that many leaders treat the dashboard as the destination. Getting exec buy-in, getting the tooling in place, getting the metrics flowing... it all feels like progress, and it is. But it's the easier of the two problems they need to solve. The harder one is turning what the dashboard shows into actual change.

The Data Gives You the Argument. Not the Answer.

The truth is that visibility is not change. A dashboard is permission to have a harder conversation, not the conversation itself. The number sitting in your issue cycle time chart doesn't tell you which team to talk to first, what's causing the slowdown, how to frame the ask, or how to sustain the improvement once you've made it.

Those are judgment calls. And they require something the tooling can't give you. The leaders I've seen actually move organizations understand this from the start.

Three Places Teams Get Stuck

In practice, the "now what?" problem shows up in three places, and they compound if you don't catch them early.

The signal isn't the full truth. Raw metrics without context are noise. A spike in deployment frequency isn't automatically good news. A drop in PR cycle time might mean engineers are cutting corners. Without someone who understands what the numbers mean in the context of your specific org, your teams, and your current priorities, the data just sits there looking credible.

The interpretation doesn't get alignment. This is the more common failure. The leader knows what the data is telling them. They've done the analysis, they've drawn the conclusion, and then they bring it to their teams and it lands like a wet blanket. People push back, get defensive, or nod politely and do nothing. Worse still, different leaders read the data differently, and you end up with conflicting messages and competing initiatives running in parallel. The data didn't fail. The change management did.

The intervention doesn't get measured. Teams rally, make changes, run the initiative, and then never check whether it worked. Six months later, the dashboard looks basically the same. Nobody's sure if the thing they did had any effect. The loop never closes.
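Closing that loop doesn't have to be elaborate. Here's a minimal sketch of the idea in Python, assuming you can export issue cycle times for a window before and after the initiative. The numbers and the `relative_change` helper are made up for illustration, and a real check would also need to account for seasonality and sample size.

```python
from statistics import median

def relative_change(before, after):
    """Relative change in the median between two samples (negative means it dropped)."""
    baseline = median(before)
    return (median(after) - baseline) / baseline

# Hypothetical issue cycle times in days, sampled before and after an initiative.
before_days = [6, 8, 7, 9, 10]
after_days = [5, 6, 7, 5, 6]

change = relative_change(before_days, after_days)
print(f"Median cycle time changed by {change:.0%}")
```

The point isn't the arithmetic. It's that someone owns the comparison and puts a date on the calendar to run it.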

This Is Change Management, Not Data Work

That second failure is the one worth focusing on, because it reveals where the hard work really is.

Everything after the dashboard is a people problem. The skills that matter aren't analytical; they're organizational. Getting momentum behind an initiative. Creating genuine ownership rather than assigned accountability. Giving different personalities space to process and react before you call for commitment. Building alignment around a shared end-state vision, not just a shared metric.

These are classic change management fundamentals, and most engineering leaders don't think of themselves as change managers. They think of themselves as technical leaders who now have data. That mindset shift matters.

The data gives you leverage. You still have to do the work.

Build the Ladder

The most practical thing I've seen work: agree on the metrics first, then build your goals up from them.

I'm a fan of the OKR framework, particularly how OKRs ladder up. Done well, they create a chain of accountability from executive vision down to daily engineering work.

The metrics look different at each level. At the team level, they are granular delivery signals such as issue cycle time, PR throughput, and deployment frequency. At the director or VP level, you're looking at flow across teams: lead time, quality trends, how investment is distributed across new features versus maintenance versus technical debt. At the executive level, the question shifts to whether engineering capacity is pointed at the right things and whether that effort is translating into outcomes.

When you build OKRs up from those metrics, the ladder becomes coherent. A company-level objective around delivery speed means something specific to the VP: a cycle time target, a deployment frequency threshold. And it means something specific to the team: the practices and commitments that will actually move it. The metric connects the levels and keeps your goals from becoming nested wish lists.
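One way to picture the ladder is as a small tree where every objective carries its own metrics. The sketch below is illustrative only: the `Objective` and `KeyResult` types, the objective statements, and the targets are all hypothetical, not a prescribed schema. The `unmeasured` helper walks the ladder and flags any rung that has a goal but no metric behind it.

```python
from dataclasses import dataclass, field
from typing import List

@dataclass
class KeyResult:
    metric: str    # e.g. "PR cycle time"
    target: float  # hypothetical target value
    unit: str

@dataclass
class Objective:
    level: str      # "executive", "vp", or "team"
    statement: str
    key_results: List[KeyResult] = field(default_factory=list)
    children: List["Objective"] = field(default_factory=list)

def unmeasured(objective: Objective) -> List[str]:
    """Return the statements of objectives in the ladder with no metric attached."""
    missing = [] if objective.key_results else [objective.statement]
    for child in objective.children:
        missing.extend(unmeasured(child))
    return missing

# Hypothetical ladder: the company objective has no metric yet.
team = Objective("team", "Shorten review turnaround",
                 [KeyResult("PR cycle time", 24, "hours")])
vp = Objective("vp", "Improve flow across teams",
               [KeyResult("lead time", 5, "days")], [team])
company = Objective("executive", "Ship faster", [], [vp])

print(unmeasured(company))
```

In this example the only rung flagged is the company-level "Ship faster", which is exactly the kind of wish-list goal the ladder is meant to catch.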

One thing becomes apparent quickly: if your measurement is siloed, manual, or inconsistent across teams, you can't build a trustworthy ladder. The OKR is only as credible as the data underpinning it, and leaders who try to run this playbook on top of unreliable metrics will eventually lose the room.

The Work Starts Here

The leaders who make the most of their engineering visibility are the ones who understand that the dashboard was the beginning, not the destination.

Getting the data flowing is hard, and it's absolutely worth doing... but the ROI only materializes when someone is willing to do the unglamorous work that comes after: interpreting those signals, building alignment, driving the change, and closing the loop.

That's the real work.